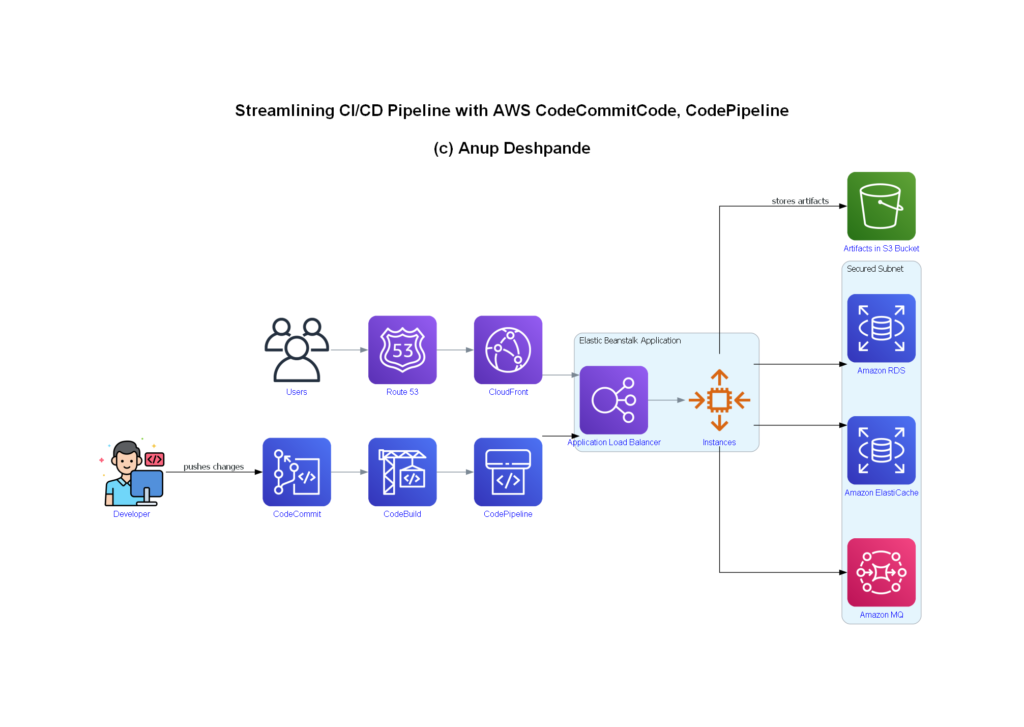

In our previous efforts, we used shell scripts to automate the creation of the AWS resources that our web application deployment needs. The shell scripts worked, but they became hard to maintain once we had to handle exceptions and more complex scenarios, such as checking whether a resource already exists before creating it.

For this CI/CD pipeline we therefore moved the automation to Python, which gives us the boto3 library, structured exception handling, and clearer control flow.

In addition to using Python, we decided to utilize AWS CodeCommit as our version control service. AWS CodeCommit provides a secure and scalable environment for storing our source code, and it integrates seamlessly with other AWS services, enhancing our overall CI/CD pipeline.

Situation

The client needed to:

– Enhance the efficiency of their CI/CD pipeline.

– Reduce the time taken for deployments.

– Minimize manual interventions in the deployment process.

– Ensure secure handling of credentials and infrastructure.

Task

Provide a Proof of Concept (POC) demonstrating:

– Integration of AWS CodeCommit, CodeBuild, and CodePipeline for CI/CD.

– Use of Python scripts for automating infrastructure setup.

– Secure management of credentials using AWS Secrets Manager.

Action

1. Creating a Dedicated Security Group for Backend components

Backend components such as the database, the MQ broker, and ElastiCache do not need to be exposed to the public and only talk to each other internally, so they should all belong to a dedicated security group. The Python script below checks whether the security group exists and creates it if it does not. Because these components communicate internally, the group needs an inbound rule that allows traffic from its own security group, so the script adds that rule right after creating the group.

import boto3


def create_security_group(group_name, description, vpc_id, region):
    ec2 = boto3.client('ec2', region_name=region)

    # Check if the security group already exists
    existing_sg = ec2.describe_security_groups(
        Filters=[{'Name': 'group-name', 'Values': [group_name]}]
    )

    if existing_sg['SecurityGroups']:
        security_group_id = existing_sg['SecurityGroups'][0]['GroupId']
        print(f"Security Group {group_name} already exists with ID: {security_group_id}")
    else:
        # Create the security group
        response = ec2.create_security_group(
            GroupName=group_name,
            Description=description,
            VpcId=vpc_id
        )
        security_group_id = response['GroupId']
        print(f"Created Security Group ID: {security_group_id}")

    # Check if the inbound rule already exists
    ingress_rules = ec2.describe_security_groups(GroupIds=[security_group_id])['SecurityGroups'][0]['IpPermissions']
    rule_exists = any(
        rule['IpProtocol'] == '-1' and
        any(group['GroupId'] == security_group_id for group in rule['UserIdGroupPairs'])
        for rule in ingress_rules
    )

    if rule_exists:
        print(f"Inbound rule already exists for Security Group ID: {security_group_id}")
    else:
        # Add inbound rule to allow all traffic within the same security group
        ec2.authorize_security_group_ingress(
            GroupId=security_group_id,
            IpPermissions=[
                {
                    'IpProtocol': '-1',
                    'FromPort': -1,
                    'ToPort': -1,
                    'UserIdGroupPairs': [{'GroupId': security_group_id}]
                }
            ]
        )
        print(f"Added inbound rule to Security Group ID: {security_group_id}")

    return security_group_id


region = 'ap-south-1'
ec2 = boto3.client('ec2', region_name=region)
vpc_id = ec2.describe_vpcs()['Vpcs'][0]['VpcId']
security_group_id = create_security_group('dmanup-aws-codecomit-backend-secgrp',
                                          'Security group for backend of AWS PaaS demo', vpc_id, region)
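The script above takes the first VPC that `describe_vpcs` returns, which may not be the intended one in an account with several VPCs. Below is a minimal sketch of a more explicit choice, assuming the backend should live in the default VPC. `pick_vpc_id` is a hypothetical helper, not part of the original script; it works on the `Vpcs` list of the response, prefers the VPC flagged `IsDefault`, and falls back to the first entry.

```python
def pick_vpc_id(vpcs):
    """Return the ID of the default VPC, or of the first VPC if none is default.

    `vpcs` is the 'Vpcs' list from an EC2 describe_vpcs response.
    """
    if not vpcs:
        raise ValueError("No VPCs found in this region")
    for vpc in vpcs:
        if vpc.get('IsDefault'):
            return vpc['VpcId']
    return vpcs[0]['VpcId']


# Hypothetical sample shaped like a describe_vpcs response.
sample = [
    {'VpcId': 'vpc-0aaa', 'IsDefault': False},
    {'VpcId': 'vpc-0bbb', 'IsDefault': True},
]
print(pick_vpc_id(sample))
```

With boto3 this could be called as `pick_vpc_id(ec2.describe_vpcs()['Vpcs'])`.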

2. Creating AWS RDS DB instance

The client project needs a MySQL database. The Python script below does the following:

– Creates the parameter group and the subnet group for the RDS DB.

– Generates the security credentials (username, password) for the DB login and stores them in AWS Secrets Manager.

– For security purposes, public access to the DB is disabled as part of the creation.

– To create the tables, public access at the DB level is enabled temporarily, and the local IP address is allowed as an inbound rule in the DB security group.

– Once the DB is initialized and the required tables are created, public access is disabled again and the local IP address is removed from the inbound rules of the DB security group.
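The last two steps open the database to the outside for a short time, so the cleanup should run even when table creation fails. One way to express that is a small context manager. This is a minimal sketch with hypothetical names (`temporary_access`, `open_access`, `close_access`), using plain callables as stand-ins for the real AWS calls:

```python
from contextlib import contextmanager


@contextmanager
def temporary_access(open_access, close_access):
    """Run `open_access`, yield, and always run `close_access` afterwards."""
    open_access()
    try:
        yield
    finally:
        close_access()


# Stand-ins for the real steps (enable public access, add the inbound rule, ...).
events = []
try:
    with temporary_access(lambda: events.append('opened'),
                          lambda: events.append('closed')):
        events.append('loading tables')
        raise RuntimeError('simulated SQL failure')
except RuntimeError:
    pass

print(events)
```

Even though the simulated SQL step raises, the closing callable still runs, so the database would not stay publicly reachable after a failed run.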

# Author: Anup Deshpande
# Date: 01-July-2024
# Script to create & set up the AWS RDS DB with MySQL.
# All the components are validated first for their existence.
# Components are created only if they are not present.
# The script ensures duplicate components are not created even if it is rerun multiple times.
# Parameter group & subnet group for the RDS DB are created.
# Once the DB is fully up & available, the local client IP is added to the security group of the RDS DB.
# RDS DB public access is temporarily enabled & tables are created by connecting to the DB using a MySQL client.
# After the tables are created successfully, public access & inbound access for the IP address are removed.

import json
import os
import secrets
import string

import boto3
import pymysql
import requests


def get_or_create_secret(secret_name, region):
    client = boto3.client('secretsmanager', region_name=region)
    try:
        # Fetch the secret value; this raises ResourceNotFoundException if the secret does not exist
        secret_response = client.get_secret_value(SecretId=secret_name)
        secret = json.loads(secret_response['SecretString'])
        print(f"Secret {secret_name} already exists.")
    except client.exceptions.ResourceNotFoundException:
        # Generate a secure password. RDS rejects '/', '@', '"' and spaces
        # in the master password, so those characters are left out.
        alphabet = string.ascii_letters + string.digits + '!#$%&()*+,-.:;<=>?[]^_`{|}~'
        password = ''.join(secrets.choice(alphabet) for _ in range(16))
        secret = {
            "username": "admin",
            "password": password
        }
        # Store the secret in AWS Secrets Manager
        client.create_secret(
            Name=secret_name,
            Description="RDS MySQL DB credentials",
            SecretString=json.dumps(secret)
        )
        print(f"Stored new secret {secret_name} in AWS Secrets Manager.")
    return secret

def create_or_get_rds_instance(db_instance_identifier, db_instance_class, engine, master_username, master_user_password,
                               db_name, subnet_group_name, security_group_id, parameter_group_name, region):
    rds = boto3.client('rds', region_name=region)

    # Check if the RDS instance already exists
    try:
        response = rds.describe_db_instances(DBInstanceIdentifier=db_instance_identifier)
        endpoint = response['DBInstances'][0]['Endpoint']['Address']
        print(f"RDS Instance {db_instance_identifier} already exists with endpoint: {endpoint}")
        return endpoint
    except rds.exceptions.DBInstanceNotFoundFault:
        print(f"RDS Instance {db_instance_identifier} does not exist, creating a new one.")

    rds.create_db_instance(
        DBInstanceIdentifier=db_instance_identifier,
        DBInstanceClass=db_instance_class,
        Engine=engine,
        MasterUsername=master_username,
        MasterUserPassword=master_user_password,
        AllocatedStorage=20,
        DBSubnetGroupName=subnet_group_name,
        VpcSecurityGroupIds=[security_group_id],
        DBParameterGroupName=parameter_group_name,
        BackupRetentionPeriod=0,
        PubliclyAccessible=False,  # Keep the instance private at creation; public access is enabled only temporarily later
        Port=3306,
        DBName=db_name,
        EngineVersion='8.0',
        StorageType='gp2'
    )

    rds.get_waiter('db_instance_available').wait(DBInstanceIdentifier=db_instance_identifier)
    endpoint = rds.describe_db_instances(DBInstanceIdentifier=db_instance_identifier)['DBInstances'][0]['Endpoint'][
        'Address']
    print(f"Created RDS Endpoint: {endpoint}")
    return endpoint

def modify_public_access(db_instance_identifier, public_access, region):
    rds = boto3.client('rds', region_name=region)
    rds.modify_db_instance(
        DBInstanceIdentifier=db_instance_identifier,
        PubliclyAccessible=public_access,
        ApplyImmediately=True
    )
    rds.get_waiter('db_instance_available').wait(DBInstanceIdentifier=db_instance_identifier)
    print(f"Set PubliclyAccessible to {public_access} for RDS instance {db_instance_identifier}")


def create_subnet_group(subnet_group_name, subnet_ids, region):
    rds = boto3.client('rds', region_name=region)
    try:
        rds.describe_db_subnet_groups(DBSubnetGroupName=subnet_group_name)
        print(f"Subnet group {subnet_group_name} already exists.")
    except rds.exceptions.DBSubnetGroupNotFoundFault:
        rds.create_db_subnet_group(
            DBSubnetGroupName=subnet_group_name,
            DBSubnetGroupDescription="Subnet group for AWS PaaS demo RDS DB",
            SubnetIds=subnet_ids
        )
        print(f"Created subnet group: {subnet_group_name}")


def create_parameter_group(parameter_group_name, region):
    rds = boto3.client('rds', region_name=region)
    try:
        rds.describe_db_parameter_groups(DBParameterGroupName=parameter_group_name)
        print(f"Parameter group {parameter_group_name} already exists.")
    except rds.exceptions.DBParameterGroupNotFoundFault:
        rds.create_db_parameter_group(
            DBParameterGroupName=parameter_group_name,
            DBParameterGroupFamily='mysql8.0',
            Description="Parameter group for AWS PaaS demo RDS DB"
        )
        print(f"Created parameter group: {parameter_group_name}")

def add_inbound_rule(security_group_id, ip_address, region):
    ec2 = boto3.client('ec2', region_name=region)
    try:
        ec2.authorize_security_group_ingress(
            GroupId=security_group_id,
            IpProtocol='tcp',
            FromPort=3306,
            ToPort=3306,
            CidrIp=f'{ip_address}/32'
        )
        print(f"Added inbound rule to Security Group ID: {security_group_id} for IP: {ip_address}")
    except ec2.exceptions.ClientError as e:
        if e.response['Error']['Code'] == 'InvalidPermission.Duplicate':
            print(f"Inbound rule for IP {ip_address} already exists in Security Group ID: {security_group_id}")
        else:
            # Any other failure should not be silently ignored
            raise


def remove_inbound_rule(security_group_id, ip_address, region):
    ec2 = boto3.client('ec2', region_name=region)
    ec2.revoke_security_group_ingress(
        GroupId=security_group_id,
        IpProtocol='tcp',
        FromPort=3306,
        ToPort=3306,
        CidrIp=f'{ip_address}/32'
    )
    print(f"Removed inbound rule from Security Group ID: {security_group_id} for IP: {ip_address}")

def run_sql_file(endpoint, username, password, db_name, sql_file_path):
    with open(sql_file_path, 'r') as file:
        # Naive split on ';': fine for simple dumps, but it breaks on
        # statements that contain ';' inside string literals.
        sql_commands = file.read().split(';')

    connection = pymysql.connect(
        host=endpoint,
        user=username,
        password=password,
        database=db_name
    )
    try:
        with connection.cursor() as cursor:
            for command in sql_commands:
                if command.strip():
                    cursor.execute(command)
            connection.commit()

            # Validate SQL execution by running "SHOW TABLES"
            cursor.execute("SHOW TABLES;")
            tables = cursor.fetchall()
            print("Tables in the database:", tables)
    finally:
        # Close the connection even if a statement fails
        connection.close()
    print("Executed SQL file successfully.")

region = 'ap-south-1'
secret_name = 'RDSDB_Credentials1'
db_instance_identifier = 'dmanup-aws-codecomit-demo-rdsdb'
db_instance_class = 'db.t3.micro'
engine = 'mysql'
db_name = 'accounts'
subnet_group_name = 'dmanup-aws-codecomit-demo-db-subnt'
parameter_group_name = 'dmanup-aws-codecomit-demo-rds-db-param-grp'

# Fetch credentials from Secrets Manager or create new ones
secret = get_or_create_secret(secret_name, region)
master_username = secret['username']
master_user_password = secret['password']

# Create or get the RDS instance
ec2 = boto3.client('ec2', region_name=region)
vpc_id = ec2.describe_vpcs()['Vpcs'][0]['VpcId']
subnet_ids = [subnet['SubnetId'] for subnet in
              ec2.describe_subnets(Filters=[{'Name': 'vpc-id', 'Values': [vpc_id]}])['Subnets']]
create_subnet_group(subnet_group_name, subnet_ids, region)
create_parameter_group(parameter_group_name, region)

# Fetch the security group ID
security_group = ec2.describe_security_groups(
    Filters=[{'Name': 'group-name', 'Values': ['dmanup-aws-codecomit-backend-secgrp']}])
if security_group['SecurityGroups']:
    security_group_id = security_group['SecurityGroups'][0]['GroupId']
    print(f"Security Group ID: {security_group_id}")
else:
    raise Exception("Security group 'dmanup-aws-codecomit-backend-secgrp' not found")

rds_endpoint = create_or_get_rds_instance(db_instance_identifier, db_instance_class, engine, master_username,
                                          master_user_password, db_name, subnet_group_name, security_group_id,
                                          parameter_group_name, region)

# Get public IP address
local_ip = requests.get('https://checkip.amazonaws.com').text.strip()

# Modify RDS instance to enable public access
modify_public_access(db_instance_identifier, True, region)
try:
    # Add inbound rule to security group for local IP
    add_inbound_rule(security_group_id, local_ip, region)
    try:
        # Run SQL file to set up the database
        sql_file_path = os.path.join(os.getcwd(), 'src', 'main', 'resources', 'db_backup.sql')
        run_sql_file(rds_endpoint, master_username, master_user_password, db_name, sql_file_path)
    finally:
        # Remove local IP from the security group, even if the SQL step failed
        remove_inbound_rule(security_group_id, local_ip, region)
finally:
    # Modify RDS instance to disable public access, even if an earlier step failed
    modify_public_access(db_instance_identifier, False, region)
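The password generated for the secret has to respect the rules of the service that consumes it. RDS documents that a master password may not contain `/`, `@`, `"`, or spaces, and Amazon MQ documents a similar restriction on commas, colons, and equal signs. The sketch below shows one way to parameterize the generator; `generate_password` and its `forbidden` argument are hypothetical names used for illustration.

```python
import secrets
import string


def generate_password(length=16, forbidden='/@" '):
    """Generate a random password that avoids the characters in `forbidden`."""
    alphabet = [c for c in string.ascii_letters + string.digits + string.punctuation
                if c not in forbidden]
    return ''.join(secrets.choice(alphabet) for _ in range(length))


rds_password = generate_password()                 # avoids / @ " and space
mq_password = generate_password(forbidden=',:=')   # hypothetical reuse for a broker user
print(len(rds_password), len(mq_password))
```

Keeping the forbidden set as a parameter lets the same helper serve both the database and the broker credentials without duplicating the character list.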

3. Creating the Amazon MQ Broker

import json
import secrets
import string
import time

import boto3


def get_or_create_secret(secret_name, region):
    client = boto3.client('secretsmanager', region_name=region)
    try:
        # Fetch the secret value; this raises ResourceNotFoundException if the secret does not exist
        secret_response = client.get_secret_value(SecretId=secret_name)
        secret = json.loads(secret_response['SecretString'])
        print(f"Secret {secret_name} already exists.")
    except client.exceptions.ResourceNotFoundException:
        # Generate a secure password. Amazon MQ rejects passwords that
        # contain ',', ':' or '=', so those characters are left out.
        alphabet = string.ascii_letters + string.digits + '!#$%&()*+-./;<>?@[]^_`{|}~'
        password = ''.join(secrets.choice(alphabet) for _ in range(16))
        secret = {
            "username": "admin",
            "password": password
        }
        # Store the secret in AWS Secrets Manager
        client.create_secret(
            Name=secret_name,
            Description="MQ broker credentials",
            SecretString=json.dumps(secret)
        )
        print(f"Stored new secret {secret_name} in AWS Secrets Manager.")
    return secret

def create_or_get_mq_broker(broker_name, broker_instance_type, engine_version, subnet_id, security_group_id, region):
    mq = boto3.client('mq', region_name=region)

    # Check if the broker already exists
    try:
        response = mq.describe_broker(BrokerId=broker_name)
        broker_id = response['BrokerId']
        print(f"MQ Broker {broker_name} already exists with ID: {broker_id}")
        return broker_id
    except mq.exceptions.NotFoundException:
        print(f"MQ Broker {broker_name} does not exist, creating a new one.")

    secret = get_or_create_secret('RabbitMQ_Credentials', region)
    username = secret['username']
    password = secret['password']

    broker_params = {
        'BrokerName': broker_name,
        'DeploymentMode': 'SINGLE_INSTANCE',
        'EngineType': 'RabbitMQ',
        'EngineVersion': engine_version,
        'HostInstanceType': broker_instance_type,
        'SubnetIds': [subnet_id],
        'SecurityGroups': [security_group_id],
        'Users': [{"Username": username, "Password": password}],
        'PubliclyAccessible': False
    }

    broker_response = mq.create_broker(**broker_params)
    broker_id = broker_response['BrokerId']
    print(f"Creating MQ Broker {broker_name} with ID: {broker_id}")

    # Poll until the broker is running; stop if creation fails instead of looping forever
    while True:
        status = mq.describe_broker(BrokerId=broker_id)['BrokerState']
        print(f"Current Broker Status: {status}")
        if status == 'RUNNING':
            break
        if status == 'CREATION_FAILED':
            raise RuntimeError(f"MQ Broker {broker_name} failed to create")
        time.sleep(30)

    print(f"MQ Broker {broker_name} is now RUNNING with ID: {broker_id}")
    return broker_id

region = 'ap-south-1'
broker_name = 'dmanup-aws-codecomit-demo-mq-broker'
broker_instance_type = 'mq.t3.micro'
engine_version = '3.8.22'
security_group_name = 'dmanup-aws-codecomit-backend-secgrp'

# Fetch the security group ID
ec2 = boto3.client('ec2', region_name=region)
security_group = ec2.describe_security_groups(Filters=[{'Name': 'group-name', 'Values': [security_group_name]}])
if security_group['SecurityGroups']:
    security_group_id = security_group['SecurityGroups'][0]['GroupId']
    print(f"Security Group ID: {security_group_id}")
else:
    raise Exception(f"Security group '{security_group_name}' not found")

# Fetch VPC ID
vpc_id = ec2.describe_vpcs()['Vpcs'][0]['VpcId']
print(f"VPC ID: {vpc_id}")

# Fetch Subnet IDs
subnet_ids = [subnet['SubnetId'] for subnet in
              ec2.describe_subnets(Filters=[{'Name': 'vpc-id', 'Values': [vpc_id]}])['Subnets']]
print(f"Subnet IDs: {subnet_ids}")

# Select a single subnet for SINGLE_INSTANCE deployment mode
subnet_id = subnet_ids[0]

# Create or get the MQ broker
create_or_get_mq_broker(broker_name, broker_instance_type, engine_version, subnet_id, security_group_id, region)
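The broker script waits for the `RUNNING` state in an open-ended loop. A more defensive variant bounds the wait and stops early on a failure state. The following is a minimal sketch of such a helper; `wait_for_state` and its parameters are hypothetical names, and the state source is passed in as a callable so the helper itself does not depend on any AWS client.

```python
import time


def wait_for_state(get_state, target, failed=(), timeout=1800, interval=30, sleep=time.sleep):
    """Poll `get_state()` until it returns `target`.

    Raises RuntimeError on a state listed in `failed`,
    and TimeoutError once `timeout` seconds have been waited.
    """
    waited = 0
    while True:
        state = get_state()
        if state == target:
            return state
        if state in failed:
            raise RuntimeError(f"Resource entered failure state: {state}")
        if waited >= timeout:
            raise TimeoutError(f"Still in state {state} after {waited} seconds")
        sleep(interval)
        waited += interval


# Simulated state sequence; a real caller might pass something like
# lambda: mq.describe_broker(BrokerId=broker_id)['BrokerState']
states = iter(['CREATION_IN_PROGRESS', 'CREATION_IN_PROGRESS', 'RUNNING'])
result = wait_for_state(lambda: next(states), 'RUNNING',
                        failed=('CREATION_FAILED',), sleep=lambda seconds: None)
print(result)
```

Injecting `sleep` keeps the helper quick to exercise without real waiting, and the same function could serve any resource that exposes a status string.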

4. Creating the Amazon ElastiCache Cluster

import boto3
import json
import time


def create_or_get_elasticache_cluster(cluster_id, node_type, engine, num_cache_nodes, subnet_group_name,
                                      security_group_id, region):
    elasticache = boto3.client('elasticache', region_name=region)

    # Check if the Elasticache cluster already exists
    try:
        response = elasticache.describe_cache_clusters(CacheClusterId=cluster_id, ShowCacheNodeInfo=True)
        endpoint = response['CacheClusters'][0].get('CacheNodes', [{}])[0].get('Endpoint')
        if endpoint:
            print(
                f"Elasticache Cluster {cluster_id} already exists with endpoint: {endpoint['Address']}:{endpoint['Port']}")
            return endpoint
        else:
            print(f"Elasticache Cluster {cluster_id} already exists but endpoint information is not available yet.")
            return None
    except elasticache.exceptions.CacheClusterNotFoundFault:
        print(f"Elasticache Cluster {cluster_id} does not exist, creating a new one.")

    # Create the Elasticache cluster
    response = elasticache.create_cache_cluster(
        CacheClusterId=cluster_id,
        CacheNodeType=node_type,
        Engine=engine,
        NumCacheNodes=num_cache_nodes,
        CacheSubnetGroupName=subnet_group_name,
        SecurityGroupIds=[security_group_id],
        EngineVersion='1.6.17',
        Port=11211
    )

    # Wait for the cluster to become available
    while True:
        response = elasticache.describe_cache_clusters(CacheClusterId=cluster_id, ShowCacheNodeInfo=True)
        status = response['CacheClusters'][0]['CacheClusterStatus']
        print(f"Current Elasticache Cluster Status: {status}")
        if status == 'available':
            endpoint = response['CacheClusters'][0].get('CacheNodes', [{}])[0].get('Endpoint')
            if endpoint:
                print(
                    f"Elasticache Cluster {cluster_id} is now available with endpoint: {endpoint['Address']}:{endpoint['Port']}")
                return endpoint
            else:
                print(f"Elasticache Cluster {cluster_id} is available but endpoint information is not available yet.")
                return None
        time.sleep(30)

region = 'ap-south-1'
cluster_id = 'dmanup-aws-codecomit-demo-elasticache'
node_type = 'cache.t3.micro'
engine = 'memcached'
num_cache_nodes = 1
security_group_name = 'dmanup-aws-codecomit-backend-secgrp'
subnet_group_name = 'dmanup-aws-codecomit-demo-elasticache-subnet-group'

# Fetch the security group ID
ec2 = boto3.client('ec2', region_name=region)
security_group = ec2.describe_security_groups(Filters=[{'Name': 'group-name', 'Values': [security_group_name]}])
if security_group['SecurityGroups']:
    security_group_id = security_group['SecurityGroups'][0]['GroupId']
    print(f"Security Group ID: {security_group_id}")
else:
    raise Exception(f"Security group '{security_group_name}' not found")

# Fetch VPC ID
vpc_id = ec2.describe_vpcs()['Vpcs'][0]['VpcId']
print(f"VPC ID: {vpc_id}")

# Fetch Subnet IDs
subnet_ids = [subnet['SubnetId'] for subnet in
              ec2.describe_subnets(Filters=[{'Name': 'vpc-id', 'Values': [vpc_id]}])['Subnets']]
print(f"Subnet IDs: {subnet_ids}")

# Create the subnet group
elasticache = boto3.client('elasticache', region_name=region)
try:
    elasticache.describe_cache_subnet_groups(CacheSubnetGroupName=subnet_group_name)
    print(f"Subnet group {subnet_group_name} already exists.")
except elasticache.exceptions.CacheSubnetGroupNotFoundFault:
    elasticache.create_cache_subnet_group(
        CacheSubnetGroupName=subnet_group_name,
        CacheSubnetGroupDescription="Subnet group for AWS CodeCommit demo Elasticache",
        SubnetIds=subnet_ids
    )
    print(f"Created subnet group: {subnet_group_name}")

# Create or get the Elasticache cluster
create_or_get_elasticache_cluster(cluster_id, node_type, engine, num_cache_nodes, subnet_group_name, security_group_id,
                                  region)
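The expression `.get('CacheNodes', [{}])[0]` in the script above only covers the case where the `CacheNodes` key is missing; if the key is present but the list is still empty, indexing it raises `IndexError`. A minimal sketch of a more tolerant lookup follows, assuming a response shaped like the one the script already reads. `first_node_endpoint` is a hypothetical helper name.

```python
def first_node_endpoint(response):
    """Return the first cache node's endpoint dict, or None if it is not available yet."""
    clusters = response.get('CacheClusters') or []
    if not clusters:
        return None
    nodes = clusters[0].get('CacheNodes') or []
    if not nodes:
        return None
    return nodes[0].get('Endpoint')


# Hypothetical responses shaped like describe_cache_clusters output.
ready = {'CacheClusters': [{'CacheNodes': [{'Endpoint': {'Address': 'demo.cache.example', 'Port': 11211}}]}]}
creating = {'CacheClusters': [{'CacheClusterStatus': 'creating', 'CacheNodes': []}]}

print(first_node_endpoint(ready))
print(first_node_endpoint(creating))
```

The caller can then treat `None` uniformly as "endpoint not available yet" for both the existing-cluster check and the wait loop.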

5. Updating the Application Properties

import boto3
import json


def get_secret(secret_name, region):
    client = boto3.client('secretsmanager', region_name=region)
    try:
        # Fetch the secret value
        secret_response = client.get_secret_value(SecretId=secret_name)
        secret = json.loads(secret_response['SecretString'])
        print(f"Fetched secret {secret_name} from AWS Secrets Manager.")
        return secret
    except client.exceptions.ResourceNotFoundException:
        print(f"Secret {secret_name} not found.")
        return None


def get_rds_endpoint(db_instance_identifier, region):
    rds = boto3.client('rds', region_name=region)
    try:
        response = rds.describe_db_instances(DBInstanceIdentifier=db_instance_identifier)
        endpoint = response['DBInstances'][0]['Endpoint']['Address']
        print(f"Fetched RDS endpoint: {endpoint}")
        return endpoint
    except rds.exceptions.DBInstanceNotFoundFault:
        print(f"RDS instance {db_instance_identifier} not found.")
        return None


def get_mq_endpoint(broker_name, region):
    mq = boto3.client('mq', region_name=region)
    try:
        response = mq.describe_broker(BrokerId=broker_name)
        endpoint = response['BrokerInstances'][0]['Endpoints'][0]
        print(f"Fetched MQ endpoint: {endpoint}")
        return endpoint
    except mq.exceptions.NotFoundException:
        print(f"MQ broker {broker_name} not found.")
        return None


def get_cache_endpoint(cluster_id, region):
    elasticache = boto3.client('elasticache', region_name=region)
    try:
        response = elasticache.describe_cache_clusters(CacheClusterId=cluster_id, ShowCacheNodeInfo=True)
        endpoint = response['CacheClusters'][0]['CacheNodes'][0]['Endpoint']
        print(f"Fetched Cache endpoint: {endpoint['Address']}:{endpoint['Port']}")
        return endpoint
    except elasticache.exceptions.CacheClusterNotFoundFault:
        print(f"Elasticache cluster {cluster_id} not found.")
        return None

def update_properties_file(file_path, db_instance_identifier, broker_name, cache_cluster_id, region):
    rds_secret = get_secret('RDSDB_Credentials1', region)
    mq_secret = get_secret('RabbitMQ_Credentials', region)
    if not rds_secret or not mq_secret:
        print("Required secrets not found. Exiting.")
        return

    db_endpoint = get_rds_endpoint(db_instance_identifier, region)
    mq_endpoint = get_mq_endpoint(broker_name, region)
    cache_endpoint = get_cache_endpoint(cache_cluster_id, region)
    if not db_endpoint or not mq_endpoint or not cache_endpoint:
        print("Required components not found. Exiting.")
        return

    with open(file_path, 'r') as file:
        properties = file.readlines()

    # Update the properties
    new_properties = []
    for line in properties:
        if line.startswith("jdbc.url"):
            new_properties.append(
                f"jdbc.url=jdbc:mysql://{db_endpoint}:3306/accounts?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull\n")
        elif line.startswith("jdbc.username"):
            new_properties.append(f"jdbc.username={rds_secret['username']}\n")
        elif line.startswith("jdbc.password"):
            new_properties.append(f"jdbc.password={rds_secret['password']}\n")
        elif line.startswith("memcached.active.host"):
            new_properties.append(f"memcached.active.host={cache_endpoint['Address']}\n")
        elif line.startswith("memcached.active.port"):
            new_properties.append(f"memcached.active.port={cache_endpoint['Port']}\n")
        elif line.startswith("rabbitmq.address"):
            new_properties.append(f"rabbitmq.address={mq_endpoint}\n")
        elif line.startswith("rabbitmq.username"):
            new_properties.append(f"rabbitmq.username={mq_secret['username']}\n")
        elif line.startswith("rabbitmq.password"):
            new_properties.append(f"rabbitmq.password={mq_secret['password']}\n")
        else:
            new_properties.append(line)

    with open(file_path, 'w') as file:
        file.writelines(new_properties)
    print(f"Updated {file_path} with new properties.")


region = 'ap-south-1'
db_instance_identifier = 'dmanup-aws-codecomit-demo-rdsdb'  # Replace with your actual RDS instance identifier
broker_name = 'dmanup-aws-codecomit-demo-mq-broker'  # Replace with your actual MQ broker name
cache_cluster_id = 'dmanup-aws-codecomit-demo-elasticache'  # Replace with your actual Cache cluster ID
file_path = 'D:/devops_articles/aws-code-commit/vprofile-project/src/main/resources/application.properties'

# Update the application properties file
update_properties_file(file_path, db_instance_identifier, broker_name, cache_cluster_id, region)
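The `startswith` chain in the script above matches on prefixes, so a key such as `jdbc.username.hint` would also be rewritten by the `jdbc.username` branch. A minimal sketch of an alternative that matches whole keys and takes the new values from a dictionary is shown below; `apply_overrides` and the sample file content are hypothetical and only illustrate the idea.

```python
def apply_overrides(lines, overrides):
    """Return `lines` with the value of every key in `overrides` replaced.

    Comments, blank lines, and keys that are not in `overrides` pass through unchanged.
    """
    result = []
    for line in lines:
        key, sep, _ = line.partition('=')
        if sep and key.strip() in overrides:
            result.append(f"{key.strip()}={overrides[key.strip()]}\n")
        else:
            result.append(line)
    return result


# Hypothetical file content and values, for illustration only.
original = [
    "# application settings\n",
    "jdbc.username=olduser\n",
    "jdbc.username.hint=unchanged\n",
    "memcached.active.port=11211\n",
]
updated = apply_overrides(original, {"jdbc.username": "admin", "memcached.active.port": 11212})
print("".join(updated), end="")
```

Because the function works on a list of lines, it can be tried against sample content before it is pointed at the real `application.properties` file.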

6. Creating the Elastic Beanstalk Environment

import boto3
import json
import time
import os

# Set the region
REGION = "ap-south-1"

# Initialize boto3 clients
ec2 = boto3.client('ec2', region_name=REGION)
s3 = boto3.client('s3', region_name=REGION)
iam = boto3.client('iam', region_name=REGION)
elasticbeanstalk = boto3.client('elasticbeanstalk', region_name=REGION)
sts = boto3.client('sts', region_name=REGION)

# Get AWS account ID
AWS_ACCOUNT_ID = sts.get_caller_identity()["Account"]

KEY_PAIR_NAME = "dmanup-aws-codecomit-demo-keypair"
KEY_PAIR_FILE = f"{KEY_PAIR_NAME}.pem"
LOCAL_PATH = "D:/devops_articles/aws-code-commit/vprofile-project/target/vprofile-v2.war"
S3_BUCKET = "dmanup-aws-codecomit-demo-bucket"
S3_KEY = "vprofile-v2.war"
DESTINATION_PATH = f"s3://{S3_BUCKET}/{S3_KEY}"

# Create or use existing key pair
try:
    ec2.describe_key_pairs(KeyNames=[KEY_PAIR_NAME])
    print(f"Key pair already exists: {KEY_PAIR_NAME}")
except ec2.exceptions.ClientError:
    key_pair = ec2.create_key_pair(KeyName=KEY_PAIR_NAME)
    with open(KEY_PAIR_FILE, 'w') as file:
        file.write(key_pair['KeyMaterial'])
    os.chmod(KEY_PAIR_FILE, 0o400)
    print(f"Created key pair: {KEY_PAIR_NAME}")

# Fetch the default VPC ID
DEFAULT_VPC_ID = ec2.describe_vpcs(Filters=[{'Name': 'isDefault', 'Values': ['true']}])['Vpcs'][0]['VpcId']
print(f"Fetched Default VPC ID: {DEFAULT_VPC_ID}")

# Fetch the subnet IDs for the default VPC
subnets = ec2.describe_subnets(Filters=[{'Name': 'vpc-id', 'Values': [DEFAULT_VPC_ID]}])['Subnets']
SUBNET_IDS = ",".join([subnet['SubnetId'] for subnet in subnets])
print(f"Fetched Subnet IDs: {SUBNET_IDS}")

# Create or use existing S3 bucket
try:
    s3.head_bucket(Bucket=S3_BUCKET)
    print(f"S3 bucket already exists: {S3_BUCKET}")
except s3.exceptions.ClientError:
    s3.create_bucket(Bucket=S3_BUCKET, CreateBucketConfiguration={'LocationConstraint': REGION})
    print(f"Created S3 bucket: {S3_BUCKET}")

# Create or use existing IAM Role for Elastic Beanstalk Service
try:
    iam.get_role(RoleName="aws-elasticbeanstalk-service-role")
    print("IAM Role already exists: aws-elasticbeanstalk-service-role")
except iam.exceptions.NoSuchEntityException:
    iam.create_role(
        RoleName="aws-elasticbeanstalk-service-role",
        AssumeRolePolicyDocument=json.dumps({
            "Version": "2012-10-17",
            "Statement": [{
                "Effect": "Allow",
                "Principal": {"Service": "elasticbeanstalk.amazonaws.com"},
                "Action": "sts:AssumeRole"
            }]
        })
    )
    print("Created IAM Role: aws-elasticbeanstalk-service-role")

# Attach the AWS managed policies to the service role
iam.attach_role_policy(RoleName="aws-elasticbeanstalk-service-role", PolicyArn="arn:aws:iam::aws:policy/service-role/AWSElasticBeanstalkEnhancedHealth")
iam.attach_role_policy(RoleName="aws-elasticbeanstalk-service-role", PolicyArn="arn:aws:iam::aws:policy/AWSElasticBeanstalkManagedUpdatesCustomerRolePolicy")
print("Attached policies to IAM Role: aws-elasticbeanstalk-service-role")

# Create or use existing Instance Profile for Elastic Beanstalk EC2 Instances
try:
    iam.get_instance_profile(InstanceProfileName="aws-elasticbeanstalk-ec2-role")
    print("Instance Profile already exists: aws-elasticbeanstalk-ec2-role")
except iam.exceptions.NoSuchEntityException:
    iam.create_instance_profile(InstanceProfileName="aws-elasticbeanstalk-ec2-role")
    print("Created Instance Profile: aws-elasticbeanstalk-ec2-role")

# Create or use existing IAM Role for EC2 Instances
try:
    iam.get_role(RoleName="aws-elasticbeanstalk-ec2-role")
    print("IAM Role already exists: aws-elasticbeanstalk-ec2-role")
except iam.exceptions.NoSuchEntityException:
    iam.create_role(
        RoleName="aws-elasticbeanstalk-ec2-role",
        AssumeRolePolicyDocument=json.dumps({
            "Version": "2012-10-17",
            "Statement": [{
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole"
            }]
        })
    )
    print("Created IAM Role: aws-elasticbeanstalk-ec2-role")

# Attach the AWS managed policies to the instance role
iam.attach_role_policy(RoleName="aws-elasticbeanstalk-ec2-role", PolicyArn="arn:aws:iam::aws:policy/AWSElasticBeanstalkWebTier")
iam.attach_role_policy(RoleName="aws-elasticbeanstalk-ec2-role", PolicyArn="arn:aws:iam::aws:policy/AWSElasticBeanstalkWorkerTier")
print("Attached policies to IAM Role: aws-elasticbeanstalk-ec2-role")

# Attach the role to the instance profile
instance_profiles = iam.list_instance_profiles_for_role(RoleName="aws-elasticbeanstalk-ec2-role")['InstanceProfiles']
if not any(profile['InstanceProfileName'] == "aws-elasticbeanstalk-ec2-role" for profile in instance_profiles):
    iam.add_role_to_instance_profile(InstanceProfileName="aws-elasticbeanstalk-ec2-role", RoleName="aws-elasticbeanstalk-ec2-role")
    print("Attached Role to Instance Profile: aws-elasticbeanstalk-ec2-role")
else:
    print("Role already attached to Instance Profile: aws-elasticbeanstalk-ec2-role")

# Create an Elastic Beanstalk application if it does not exist yet.
# TooManyApplicationsException signals an account quota, not a duplicate name,
# so the existence check is done explicitly with describe_applications.
existing_apps = elasticbeanstalk.describe_applications(
    ApplicationNames=["dmanup-aws-codecomit-demo-app"]
)['Applications']
if existing_apps:
    print("Elastic Beanstalk Application already exists: dmanup-aws-codecomit-demo-app")
else:
    elasticbeanstalk.create_application(
        ApplicationName="dmanup-aws-codecomit-demo-app",
        Description="Demo application for AWS PaaS"
    )
    print("Created Elastic Beanstalk Application: dmanup-aws-codecomit-demo-app")

# Upload the application code to S3
s3.upload_file(LOCAL_PATH, S3_BUCKET, S3_KEY)
print(f"Uploaded application code to S3: {DESTINATION_PATH}")

# Create an application version
elasticbeanstalk.create_application_version(
    ApplicationName="dmanup-aws-codecomit-demo-app",
    VersionLabel="v1",
    SourceBundle={
        "S3Bucket": S3_BUCKET,
        "S3Key": S3_KEY
    }
)
print("Created Application Version: v1")

# Create the configuration options file

options = [

{

"Namespace": "aws:autoscaling:launchconfiguration",

"OptionName": "InstanceType",

"Value": "t3.micro"

},

{

"Namespace": "aws:autoscaling:launchconfiguration",

"OptionName": "EC2KeyName",

"Value": KEY_PAIR_NAME

},

{

"Namespace": "aws:elasticbeanstalk:environment",

"OptionName": "EnvironmentType",

"Value": "LoadBalanced"

},

{

"Namespace": "aws:autoscaling:asg",

"OptionName": "MinSize",

"Value": "2"

},

{

"Namespace": "aws:autoscaling:asg",

"OptionName": "MaxSize",

"Value": "3"

},

{

"Namespace": "aws:autoscaling:trigger",

"OptionName": "MeasureName",

"Value": "NetworkOut"

},

{

"Namespace": "aws:autoscaling:trigger",

"OptionName": "Statistic",

"Value": "Average"

},

{

"Namespace": "aws:autoscaling:trigger",

"OptionName": "Unit",

"Value": "Bytes"

},

{

"Namespace": "aws:autoscaling:trigger",

"OptionName": "Period",

"Value": "300"

},

{

"Namespace": "aws:autoscaling:trigger",

"OptionName": "BreachDuration",

"Value": "300"

},

{

"Namespace": "aws:ec2:vpc",

"OptionName": "VPCId",

"Value": DEFAULT_VPC_ID

},

{

"Namespace": "aws:ec2:vpc",

"OptionName": "Subnets",

"Value": SUBNET_IDS

},

{

"Namespace": "aws:elasticbeanstalk:environment:process:default",

"OptionName": "StickinessEnabled",

"Value": "true"

},

{

"Namespace": "aws:elasticbeanstalk:environment:process:default",

"OptionName": "StickinessLBCookieDuration",

"Value": "86400"

},

{

"Namespace": "aws:elasticbeanstalk:environment:process:default",

"OptionName": "HealthCheckPath",

"Value": "/login"

},

{

"Namespace": "aws:elasticbeanstalk:healthreporting:system",

"OptionName": "SystemType",

"Value": "basic"

},

{

"Namespace": "aws:elasticbeanstalk:application",

"OptionName": "Application Healthcheck URL",

"Value": "/login"

},

{

"Namespace": "aws:elasticbeanstalk:managedactions",

"OptionName": "ManagedActionsEnabled",

"Value": "false"

},

{

"Namespace": "aws:elasticbeanstalk:command",

"OptionName": "DeploymentPolicy",

"Value": "Rolling"

},

{

"Namespace": "aws:elasticbeanstalk:command",

"OptionName": "BatchSizeType",

"Value": "Fixed"

},

{

"Namespace": "aws:elasticbeanstalk:command",

"OptionName": "BatchSize",

"Value": "1"

},

{

"Namespace": "aws:elasticbeanstalk:sns:topics",

"OptionName": "Notification Endpoint",

"Value": "anupde@gmail.com"

},

{

"Namespace": "aws:elasticbeanstalk:environment",

"OptionName": "ServiceRole",

"Value": "aws-elasticbeanstalk-service-role"

},

{

"Namespace": "aws:autoscaling:launchconfiguration",

"OptionName": "IamInstanceProfile",

"Value": "aws-elasticbeanstalk-ec2-role"

}

]

with open('options.json', 'w') as file:
    json.dump(options, file)

print("Created Configuration Options file: options.json")

# Get the solution stack name for the Elastic Beanstalk environment

solution_stack_name = next(

stack for stack in elasticbeanstalk.list_available_solution_stacks()['SolutionStacks']

if "64bit Amazon Linux 2" in stack and "Corretto 11" in stack and "Tomcat 8.5" in stack

)

print(f"Solution Stack Name/Platform version:{solution_stack_name}")

# Create an Elastic Beanstalk environment

elasticbeanstalk.create_environment(

ApplicationName="dmanup-aws-codecomit-demo-app",

EnvironmentName="dmanup-aws-codecomit-demo-env",

SolutionStackName=solution_stack_name,

OptionSettings=options,  # pass the in-memory list directly; options.json is only a saved copy

VersionLabel="v1",

Tier={

"Name": "WebServer",

"Type": "Standard",

"Version": "1.0"

}

)

print("Created Elastic Beanstalk Environment: dmanup-aws-codecomit-demo-env")

# Wait until the environment is available

print("Waiting for the Elastic Beanstalk environment to become available...")

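while True:
    env_status = elasticbeanstalk.describe_environments(
        ApplicationName="dmanup-aws-codecomit-demo-app",
        EnvironmentNames=["dmanup-aws-codecomit-demo-env"]
    )['Environments'][0]['Status']
    print(f"Current Environment Status: {env_status}")
    if env_status == "Ready":
        break
    # Stop instead of looping forever if the environment is being torn down
    if env_status in ("Terminating", "Terminated"):
        raise RuntimeError(f"Environment creation failed with status: {env_status}")
    time.sleep(30)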

print("Elastic Beanstalk environment is now available")

# Confirm the application exists (describe_applications returns its name, not a status)
app_name = elasticbeanstalk.describe_applications(
    ApplicationNames=["dmanup-aws-codecomit-demo-app"]
)['Applications'][0]['ApplicationName']
print(f"Application found: {app_name}")

# Check if the environment was created successfully

env_status = elasticbeanstalk.describe_environments(

ApplicationName="dmanup-aws-codecomit-demo-app",

EnvironmentNames=["dmanup-aws-codecomit-demo-env"]

)['Environments'][0]['Status']

print(f"Environment Status: {env_status}")

def update_security_group_inbound_rules(env_name, backend_security_group_name, region):
    # Initialize boto3 clients
    eb = boto3.client('elasticbeanstalk', region_name=region)
    autoscaling = boto3.client('autoscaling', region_name=region)
    ec2 = boto3.client('ec2', region_name=region)

    # Fetch the Auto Scaling group name associated with the Elastic Beanstalk environment
    auto_scaling_group_name = eb.describe_environment_resources(
        EnvironmentName=env_name
    )['EnvironmentResources']['AutoScalingGroups'][0]['Name']
    print(f"AUTO_SCALING_GROUP_NAME: {auto_scaling_group_name}")

    # Fetch the instance ID from the Auto Scaling group
    instance_id = autoscaling.describe_auto_scaling_groups(
        AutoScalingGroupNames=[auto_scaling_group_name]
    )['AutoScalingGroups'][0]['Instances'][0]['InstanceId']
    print(f"INSTANCE_ID: {instance_id}")

    # Fetch the security group ID associated with the instance
    instance_security_group_id = ec2.describe_instances(
        InstanceIds=[instance_id]
    )['Reservations'][0]['Instances'][0]['SecurityGroups'][0]['GroupId']
    print(f"INSTANCE_SECURITY_GROUP_ID: {instance_security_group_id}")

    # Fetch the backend security group ID
    backend_security_group_id = ec2.describe_security_groups(
        Filters=[{'Name': 'group-name', 'Values': [backend_security_group_name]}]
    )['SecurityGroups'][0]['GroupId']
    print(f"BACKEND_SECURITY_GROUP_ID: {backend_security_group_id}")

    # Add one inbound rule per backend service, allowing traffic from the instance security group
    backend_ports = [
        (3306, "MySQL (RDS)"),
        (11211, "Memcached (ElastiCache)"),
        (5672, "RabbitMQ (Amazon MQ)"),
    ]
    for port, service in backend_ports:
        ec2.authorize_security_group_ingress(
            GroupId=backend_security_group_id,
            IpPermissions=[{
                'IpProtocol': 'tcp',
                'FromPort': port,
                'ToPort': port,
                'UserIdGroupPairs': [{'GroupId': instance_security_group_id}]
            }]
        )
        print(f"Added inbound rule for {service} traffic on port {port}.")

    # Verification
    ip_permissions = ec2.describe_security_groups(
        GroupIds=[backend_security_group_id]
    )['SecurityGroups'][0]['IpPermissions']
    print(f"Updated Inbound Rules: {ip_permissions}")

# Parameters

REGION = "ap-south-1"

ENV_NAME = "dmanup-aws-codecomit-demo-env"

BACKEND_SECURITY_GROUP_NAME = "dmanup-aws-codecomit-backend-secgrp"

# Update security group inbound rules

update_security_group_inbound_rules(ENV_NAME, BACKEND_SECURITY_GROUP_NAME, REGION)
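Running the script above a second time would call `authorize_security_group_ingress` for rules that already exist, which EC2 rejects with an `InvalidPermission.Duplicate` error. The sketch below shows one possible way to make that step re-runnable. It is an illustration rather than part of the original script: the helper name `allow_port_from_group` and its return convention are hypothetical, and it reads the error code from the exception's `response` attribute (as botocore's `ClientError` provides) instead of importing botocore.

```python
def allow_port_from_group(ec2_client, target_group_id, source_group_id, port):
    """Add a TCP ingress rule from one security group to another.

    Returns True if the rule was added and False if it already existed.
    Hypothetical helper: the name and return values are assumptions.
    """
    try:
        ec2_client.authorize_security_group_ingress(
            GroupId=target_group_id,
            IpPermissions=[{
                'IpProtocol': 'tcp',
                'FromPort': port,
                'ToPort': port,
                'UserIdGroupPairs': [{'GroupId': source_group_id}],
            }],
        )
        return True
    except Exception as exc:
        # botocore's ClientError exposes the service error under exc.response
        error_code = getattr(exc, 'response', {}).get('Error', {}).get('Code')
        if error_code == 'InvalidPermission.Duplicate':
            return False  # the rule is already in place, nothing to do
        raise  # any other failure is a real error
```

With a real client this could be called as `allow_port_from_group(ec2, backend_security_group_id, instance_security_group_id, 3306)` for each backend port.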

Creation of the CodeCommit Repository and Pipeline

import boto3

import json

# Set the region and repository details

REGION = "ap-south-1"

REPO_NAME = "dmanup-aws-codecommit-demo-repo"

BUILD_PROJECT_NAME = "dmanup-aws-codebuild-demo-project"

DEPLOY_APP_NAME = "dmanup-aws-codedeploy-demo-app"

DEPLOY_GROUP_NAME = "dmanup-aws-codedeploy-demo-group"

PIPELINE_NAME = "dmanup-aws-codepipeline-demo"

ARTIFACT_BUCKET = "dmanup-aws-codeartifact-bucket"

ENV_NAME = "dmanup-aws-codecomit-demo-env"

# Initialize boto3 clients

codecommit = boto3.client('codecommit', region_name=REGION)

codebuild = boto3.client('codebuild', region_name=REGION)

codedeploy = boto3.client('codedeploy', region_name=REGION)

codepipeline = boto3.client('codepipeline', region_name=REGION)

iam = boto3.client('iam', region_name=REGION)

s3 = boto3.client('s3', region_name=REGION)

elasticbeanstalk = boto3.client('elasticbeanstalk', region_name=REGION)

ec2 = boto3.client('ec2', region_name=REGION)

# Function to create a CodeCommit repository

def create_codecommit_repo(repo_name):
    try:
        codecommit.create_repository(
            repositoryName=repo_name,
            repositoryDescription='Demo repository for AWS CodeCommit'
        )
        print(f"Created CodeCommit repository: {repo_name}")
    except codecommit.exceptions.RepositoryNameExistsException:
        print(f"CodeCommit repository {repo_name} already exists.")

# Function to create an S3 bucket for artifacts

def create_s3_bucket(bucket_name):
    try:
        s3.create_bucket(Bucket=bucket_name, CreateBucketConfiguration={'LocationConstraint': REGION})
        print(f"Created S3 bucket: {bucket_name}")
    except s3.exceptions.BucketAlreadyOwnedByYou:
        print(f"S3 bucket {bucket_name} already exists.")
    except s3.exceptions.BucketAlreadyExists:
        # The name is taken by a bucket in another AWS account, so it cannot be used here
        print(f"S3 bucket name {bucket_name} is already in use by another account.")
        raise

# Function to create a CodeBuild project

def create_codebuild_project(project_name, artifact_bucket, role_arn):
    try:
        codebuild.create_project(
            name=project_name,
            source={
                'type': 'CODECOMMIT',
                'location': f"https://git-codecommit.{REGION}.amazonaws.com/v1/repos/{REPO_NAME}"
            },
            artifacts={'type': 'S3', 'location': artifact_bucket},
            environment={
                'type': 'LINUX_CONTAINER',
                'image': 'aws/codebuild/standard:5.0',
                'computeType': 'BUILD_GENERAL1_SMALL',
                'environmentVariables': []
            },
            serviceRole=role_arn
        )
        print(f"Created CodeBuild project: {project_name}")
    except codebuild.exceptions.ResourceAlreadyExistsException:
        print(f"CodeBuild project {project_name} already exists.")

# Function to create a CodeDeploy application and deployment group

def create_codedeploy_app_and_group(app_name, group_name, role_arn):
    try:
        codedeploy.create_application(applicationName=app_name)
        print(f"Created CodeDeploy application: {app_name}")
    except codedeploy.exceptions.ApplicationAlreadyExistsException:
        print(f"CodeDeploy application {app_name} already exists.")

    # Create deployment group
    try:
        codedeploy.create_deployment_group(
            applicationName=app_name,
            deploymentGroupName=group_name,
            serviceRoleArn=role_arn,
            deploymentConfigName='CodeDeployDefault.OneAtATime',
            ec2TagFilters=[{'Key': 'Name', 'Value': 'CodeDeployDemo', 'Type': 'KEY_AND_VALUE'}],
            autoScalingGroups=[],
            deploymentStyle={'deploymentType': 'IN_PLACE', 'deploymentOption': 'WITHOUT_TRAFFIC_CONTROL'}
        )
        print(f"Created CodeDeploy deployment group: {group_name}")
    except codedeploy.exceptions.DeploymentGroupAlreadyExistsException:
        print(f"CodeDeploy deployment group {group_name} already exists.")

# Function to create a CodePipeline

def create_codepipeline(pipeline_name, role_arn, artifact_bucket):
    try:
        codepipeline.create_pipeline(
            pipeline={
                'name': pipeline_name,
                'roleArn': role_arn,
                'artifactStore': {'type': 'S3', 'location': artifact_bucket},
                'stages': [
                    {
                        'name': 'Source',
                        'actions': [
                            {
                                'name': 'Source',
                                'actionTypeId': {
                                    'category': 'Source',
                                    'owner': 'AWS',
                                    'provider': 'CodeCommit',
                                    'version': '1'
                                },
                                'outputArtifacts': [{'name': 'SourceOutput'}],
                                'configuration': {
                                    'RepositoryName': REPO_NAME,
                                    'BranchName': 'main',
                                    # Polling is disabled, so an EventBridge rule (or a manual
                                    # release) is needed to start the pipeline on changes
                                    'PollForSourceChanges': 'false'
                                },
                                'runOrder': 1
                            }
                        ]
                    },
                    {
                        'name': 'Build',
                        'actions': [
                            {
                                'name': 'Build',
                                'actionTypeId': {
                                    'category': 'Build',
                                    'owner': 'AWS',
                                    'provider': 'CodeBuild',
                                    'version': '1'
                                },
                                'inputArtifacts': [{'name': 'SourceOutput'}],
                                'outputArtifacts': [{'name': 'BuildOutput'}],
                                'configuration': {'ProjectName': BUILD_PROJECT_NAME},
                                'runOrder': 1
                            }
                        ]
                    },
                    {
                        'name': 'Deploy',
                        'actions': [
                            {
                                'name': 'Deploy',
                                'actionTypeId': {
                                    'category': 'Deploy',
                                    'owner': 'AWS',
                                    'provider': 'CodeDeploy',
                                    'version': '1'
                                },
                                'inputArtifacts': [{'name': 'BuildOutput'}],
                                'configuration': {
                                    'ApplicationName': DEPLOY_APP_NAME,
                                    'DeploymentGroupName': DEPLOY_GROUP_NAME
                                },
                                'runOrder': 1
                            }
                        ]
                    }
                ]
            }
        )
        print(f"Created CodePipeline: {pipeline_name}")
    except codepipeline.exceptions.PipelineNameInUseException:
        print(f"CodePipeline {pipeline_name} already exists.")

# Create IAM roles for CodeBuild, CodeDeploy, and CodePipeline

def create_iam_role(role_name, policy_arn, service_principal):
    try:
        assume_role_policy_document = json.dumps({
            'Version': '2012-10-17',
            'Statement': [{
                'Effect': 'Allow',
                'Principal': {'Service': service_principal},
                'Action': 'sts:AssumeRole'
            }]
        })
        iam.create_role(
            RoleName=role_name,
            AssumeRolePolicyDocument=assume_role_policy_document,
        )
        iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
        print(f"Created IAM Role: {role_name}")
    except iam.exceptions.EntityAlreadyExistsException:
        print(f"IAM Role {role_name} already exists.")
    return f"arn:aws:iam::{boto3.client('sts').get_caller_identity().get('Account')}:role/{role_name}"

# Function to attach custom policy to the role

def attach_custom_policy_to_role(role_name, policy_name, policy_document):
    try:
        policy_response = iam.create_policy(
            PolicyName=policy_name,
            PolicyDocument=json.dumps(policy_document)
        )
        iam.attach_role_policy(
            RoleName=role_name,
            PolicyArn=policy_response['Policy']['Arn']
        )
        print(f"Attached custom policy {policy_name} to role {role_name}.")
    except iam.exceptions.EntityAlreadyExistsException:
        # The policy already exists, so rebuild its ARN and attach it
        policy_arn = f"arn:aws:iam::{boto3.client('sts').get_caller_identity().get('Account')}:policy/{policy_name}"
        iam.attach_role_policy(
            RoleName=role_name,
            PolicyArn=policy_arn
        )
        print(f"Custom policy {policy_name} already exists and attached to role {role_name}.")

# Create CodeCommit repository

create_codecommit_repo(REPO_NAME)

# Create S3 bucket for artifacts

create_s3_bucket(ARTIFACT_BUCKET)

# Create IAM roles for CodeBuild, CodeDeploy, and CodePipeline

codebuild_role_arn = create_iam_role('codebuild-service-role', 'arn:aws:iam::aws:policy/AWSCodeBuildAdminAccess',

'codebuild.amazonaws.com')

codedeploy_role_arn = create_iam_role('codedeploy-service-role',

'arn:aws:iam::aws:policy/service-role/AWSCodeDeployRole',

'codedeploy.amazonaws.com')

codepipeline_role_arn = create_iam_role('codepipeline-service-role',
                                        # AWSCodePipelineFullAccess has been deprecated in favor of AWSCodePipeline_FullAccess
                                        'arn:aws:iam::aws:policy/AWSCodePipeline_FullAccess',
                                        'codepipeline.amazonaws.com')

# Attach custom policies to codepipeline-service-role

custom_policies = {

"CodePipelineCodeCommitAccessPolicy": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"codecommit:GitPull",

"codecommit:GetBranch",

"codecommit:GetCommit",

"codecommit:ListRepositories",

"codecommit:ListBranches"

],

"Resource": f"arn:aws:codecommit:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:{REPO_NAME}"

}

]

},

"CodePipelineS3AccessPolicy": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:PutObject",

"s3:PutObjectAcl",

"s3:GetBucketLocation",

"s3:ListBucket",

"s3:GetObjectVersion"

],

"Resource": [

f"arn:aws:s3:::{ARTIFACT_BUCKET}",

f"arn:aws:s3:::{ARTIFACT_BUCKET}/*"

]

}

]

},

"CodePipelineCloudWatchLogsPolicy": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": [

f"arn:aws:logs:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:log-group:/aws/codebuild/{BUILD_PROJECT_NAME}",

f"arn:aws:logs:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:log-group:/aws/codebuild/{BUILD_PROJECT_NAME}:*"

]

}

]

},

"CodePipelineCodeBuildAccessPolicy": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"codebuild:BatchGetBuilds",

"codebuild:StartBuild"

],

"Resource": f"arn:aws:codebuild:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:project/{BUILD_PROJECT_NAME}"

}

]

},

"CodePipelineCodeDeployAccessPolicy": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"codedeploy:CreateDeployment",

"codedeploy:GetApplicationRevision",

"codedeploy:GetDeployment",

"codedeploy:GetDeploymentConfig",

"codedeploy:RegisterApplicationRevision"

],

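"Resource": [
# Revision actions apply to the application, deployments to the deployment group,
# and GetDeploymentConfig to the deployment configuration
f"arn:aws:codedeploy:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:application:{DEPLOY_APP_NAME}",
f"arn:aws:codedeploy:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:deploymentgroup:{DEPLOY_APP_NAME}/{DEPLOY_GROUP_NAME}",
f"arn:aws:codedeploy:{REGION}:{boto3.client('sts').get_caller_identity().get('Account')}:deploymentconfig:*"
]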

}

]

}

}

for policy_name, policy_document in custom_policies.items():
    attach_custom_policy_to_role('codepipeline-service-role', policy_name, policy_document)

# Create CodeBuild project

create_codebuild_project(BUILD_PROJECT_NAME, ARTIFACT_BUCKET, codebuild_role_arn)

# Create CodeDeploy application and deployment group

create_codedeploy_app_and_group(DEPLOY_APP_NAME, DEPLOY_GROUP_NAME, codedeploy_role_arn)

# Fetch the Auto Scaling group name associated with the Elastic Beanstalk environment

response = elasticbeanstalk.describe_environment_resources(

EnvironmentName=ENV_NAME

)

auto_scaling_group_name = response['EnvironmentResources']['AutoScalingGroups'][0]['Name']

print(f"Auto Scaling Group Name: {auto_scaling_group_name}")

# Update deployment group with correct EC2 tag filters and Auto Scaling groups

response = codedeploy.update_deployment_group(

applicationName=DEPLOY_APP_NAME,

currentDeploymentGroupName=DEPLOY_GROUP_NAME,

ec2TagFilters=[

{

'Key': 'Name',

'Value': 'CodeDeployDemo',

'Type': 'KEY_AND_VALUE'

}

],

autoScalingGroups=[

auto_scaling_group_name

]

)

print("Deployment group updated:", response)

# Create CodePipeline

create_codepipeline(PIPELINE_NAME, codepipeline_role_arn, ARTIFACT_BUCKET)
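Because the Source action sets `PollForSourceChanges` to `false`, CodePipeline does not poll the repository, so something else has to start the pipeline when code is pushed. One common approach is an Amazon EventBridge rule that matches CodeCommit branch updates and targets the pipeline. The sketch below shows how that could be wired up. It is an assumption, not part of the original script: the function names, the rule name, and `events_role_arn` (a role EventBridge can assume with permission for `codepipeline:StartPipelineExecution`) are hypothetical.

```python
import json


def build_codecommit_event_pattern(region, account_id, repo_name, branch):
    """Return an EventBridge pattern matching pushes to one CodeCommit branch."""
    return {
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [f"arn:aws:codecommit:{region}:{account_id}:{repo_name}"],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    }


def create_pipeline_trigger(events_client, rule_name, pattern, pipeline_arn, events_role_arn):
    """Create the rule and point it at the pipeline.

    events_client is expected to be boto3.client('events'); events_role_arn is a
    hypothetical role that EventBridge assumes to start the pipeline.
    """
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(pattern),
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{
            "Id": "codepipeline-target",
            "Arn": pipeline_arn,
            "RoleArn": events_role_arn,
        }],
    )
```

In this setup the pattern would be built from `REGION`, the account ID, `REPO_NAME`, and `'main'`, and the pipeline ARN would take the form `arn:aws:codepipeline:<region>:<account-id>:<pipeline-name>`.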

Result

The entire CI/CD pipeline was successfully streamlined using AWS CodeCommit and Python scripts, with significant benefits:

– Security: Credentials are securely managed using AWS Secrets Manager.

– Automation: Reduced manual intervention with automated setup and deployment.

– Efficiency: Significant reduction in deployment time.

– Scalability: Easily scalable CI/CD pipeline using AWS services.

Estimated Monthly Cost Analysis

Here is the estimated monthly cost analysis for the AWS infrastructure, compared with an on-premises setup and the equivalent Azure services.

AWS Services:

– CodeCommit: $18.00 per month

– CodeBuild: $2.00 per month

– CodePipeline: $1.00 per month

– Secrets Manager: $0.40 per month

– S3 Storage: $1.15 per month

Total AWS: $22.55 per month

On-Premises Setup:

– Hardware Costs: $83.33 per month (amortized)

– Power and Cooling: $100.00 per month

– Maintenance and Support: $200.00 per month

Total On-Premises: $383.33 per month

Azure Services:

– Azure Repos: $8.00 per month

– Azure DevOps: $4.80 per month

– Azure Pipelines: $1.00 per month

– Azure Key Vault: $0.30 per month

– Azure Blob Storage: $0.92 per month

Total Azure: $15.02 per month
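The totals above can be reproduced with a few lines of Python. This is only a sketch that re-adds the line items listed in this section. The dictionary layout and the names `monthly_costs` and `monthly_total` are illustrative, and `Decimal` is used so the cents add up exactly.

```python
from decimal import Decimal

# Line items copied from the estimates in this section (USD per month)
monthly_costs = {
    "AWS": {
        "CodeCommit": "18.00",
        "CodeBuild": "2.00",
        "CodePipeline": "1.00",
        "Secrets Manager": "0.40",
        "S3 Storage": "1.15",
    },
    "On-Premises": {
        "Hardware Costs (amortized)": "83.33",
        "Power and Cooling": "100.00",
        "Maintenance and Support": "200.00",
    },
    "Azure": {
        "Azure Repos": "8.00",
        "Azure DevOps": "4.80",
        "Azure Pipelines": "1.00",
        "Azure Key Vault": "0.30",
        "Azure Blob Storage": "0.92",
    },
}


def monthly_total(items):
    """Sum the line items of one platform as exact decimals."""
    return sum((Decimal(cost) for cost in items.values()), Decimal("0"))


totals = {platform: monthly_total(items) for platform, items in monthly_costs.items()}

for platform, total in totals.items():
    print(f"Total {platform}: ${total} per month")
```

Keeping the line items in one structure makes it easy to adjust a single estimate and see how the comparison shifts.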

Conclusion

This POC showcases the efficiency, security, and cost-effectiveness of using AWS CodeCommit and Python scripts for CI/CD pipeline automation. By leveraging AWS services, we achieved a streamlined and secure deployment process at an estimated $22.55 per month, well below the $383.33 estimated for an on-premises setup and in the same range as the $15.02 estimated for Azure services.

Developer icons created by Paul J. – Flaticon